Have you ever wondered if the staff behind Character AI (often shortened to C AI) actually read your chats? When you share your thoughts or ask questions on the platform, privacy becomes a big concern.

You might be asking yourself, “Is my conversation truly private?” or “Could someone be monitoring what I say?” This article will clear up those doubts and explain how your chats are handled. By understanding who can see your messages and why, you’ll feel more confident about using C AI safely.

Keep reading to discover the truth about chat privacy on C AI and what it means for you.


Privacy On Character AI

Privacy on Character AI matters a lot to users. People want to know who reads their chats and how their data is handled. Character AI collects and stores chat data to improve its services. Understanding these practices helps users feel safer while chatting.

Data Collection Practices

Character AI saves conversations on its servers. This data helps improve the AI’s responses. The platform retains chat logs even if users delete messages. Some personal information may be gathered to enhance user experience. Data is stored securely but remains accessible to authorized staff for review. Automated systems also check chats for rule violations and safety issues.

Who Has Access To Chats

Only authorized staff can access chat logs. Their access is limited to specific purposes. Staff review chats to find and fix problems. They also monitor for harmful or inappropriate content. Creators of characters do not see user chats. User privacy is protected by strict policies. Chats are not shared publicly or sold to others.

Staff Access To Conversations

Staff access to conversations is a key topic for many users of AI chat platforms. Knowing who can see your chats helps you understand privacy levels. Staff members may access chats for several reasons. It is important to clarify the limits and reasons behind this access.

Reasons For Monitoring Chats

Staff monitor chats to ensure user safety. They check for harmful or inappropriate content. This helps keep the platform safe for everyone. Monitoring also helps detect spam or misuse of the service. Staff review chats to improve AI responses. This process supports better user experiences over time.

Scope Of Staff Access

Staff access is limited to specific cases. Not all conversations are regularly reviewed. Typically, only flagged chats or a random sample of chats are checked. Deleted messages can sometimes be seen by staff, because the platform keeps conversation logs for analysis. Access is controlled to protect user privacy. Staff follow strict rules when reading chats.

Chat Retention And Deletion

Understanding how chat retention and deletion work is important for users concerned about privacy. Many wonder if their messages remain accessible after deletion. Platforms often keep data for system improvements and safety checks. Knowing the rules helps users feel secure and in control of their information.

Retention Of Deleted Messages

Deleted messages are not always erased immediately. Some platforms store these messages on their servers. Staff may access deleted chats to review content or improve AI responses. This retention helps maintain safety and quality of service. Users should assume their deleted messages might still exist for a period.
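One common way a deleted message can stay on a server is a "soft delete", where the message is only marked as deleted instead of being erased. The sketch below illustrates that general idea. It is not Character AI's actual code, and the `Message` and `ChatLog` names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Message:
    text: str
    # None means the user has not deleted the message.
    deleted_at: Optional[datetime] = None


class ChatLog:
    """Hypothetical chat store that uses soft deletion."""

    def __init__(self) -> None:
        self._messages: List[Message] = []

    def add(self, text: str) -> Message:
        message = Message(text)
        self._messages.append(message)
        return message

    def delete(self, message: Message) -> None:
        # Soft delete: record when it was deleted, but keep the text.
        message.deleted_at = datetime.now(timezone.utc)

    def visible_to_user(self) -> List[str]:
        # The user only sees messages that were never deleted.
        return [m.text for m in self._messages if m.deleted_at is None]

    def stored_on_server(self) -> List[str]:
        # Everything is still stored, including deleted messages.
        return [m.text for m in self._messages]
```

In a design like this, a message disappears from the user's view as soon as `delete` is called, yet it remains in `stored_on_server()` until a separate cleanup step removes it.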

Data Storage Duration

Data storage varies by platform and policy. Messages can be stored for days, weeks, or even longer. Storage duration depends on legal and operational needs. After this period, data is usually deleted or anonymized. Users should check each platform’s privacy policy for exact details.
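A retention period can be pictured as a simple cutoff date. The sketch below assumes a hypothetical 30-day window; real platforms set their own durations, and the function name and record layout here are invented for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window; actual durations depend on each platform's policy.
RETENTION = timedelta(days=30)


def split_by_retention(records, now):
    """Split (created_at, text) records into (kept, expired) lists.

    A record is expired once it is older than the retention window.
    Expired records are the ones a platform would delete or anonymize.
    """
    cutoff = now - RETENTION
    kept = [r for r in records if r[0] >= cutoff]
    expired = [r for r in records if r[0] < cutoff]
    return kept, expired
```

Passing `now` as an argument, instead of reading the clock inside the function, keeps the cutoff easy to check by hand for any given date.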

Creators And Chat Visibility

Understanding who can see your chats on AI platforms is important. Creators build characters that interact with users. Knowing their access to conversations helps users feel safe and informed. This section explains chat visibility between creators and users.

Can Creators See User Chats?

Creators do not have direct access to user chats. They can view a conversation only if the user shares it. Some platforms allow users to submit chats for feedback or improvement. Otherwise, creators cannot browse or read private chats. This keeps user chats private from creators unless the user chooses to share them.

Privacy Between Users And Creators

Privacy rules protect user conversations on AI platforms. User chats are stored securely and are not openly shared. Creators see chat data only when a user chooses to share it. Staff may review chats for safety but do not share details with creators. This system balances user privacy and platform improvement.

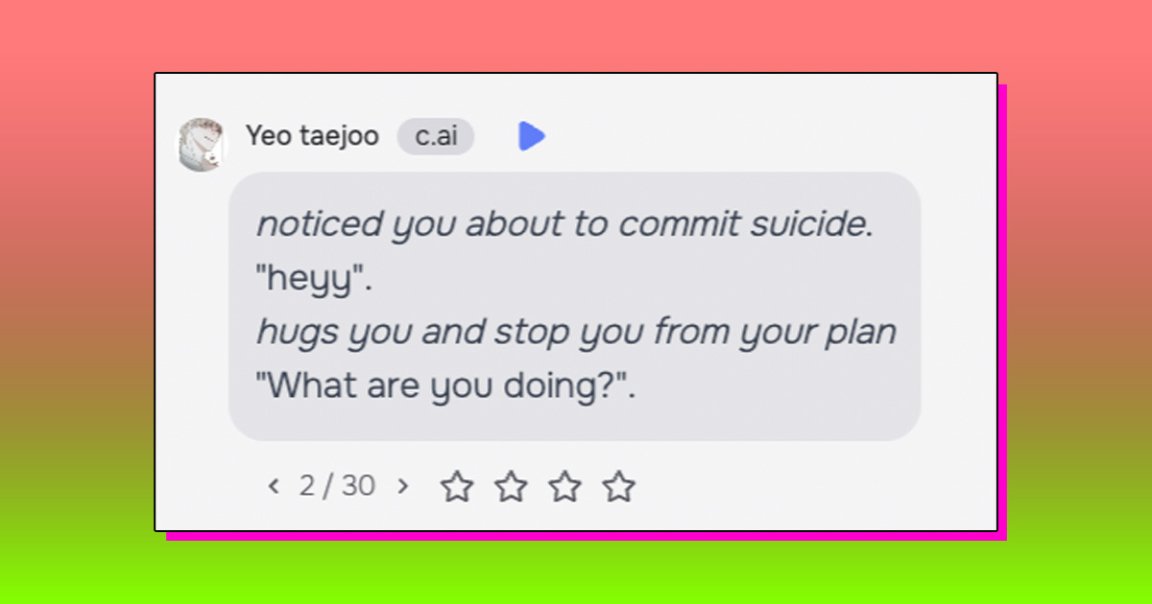

Safety And Moderation

Safety and moderation are key parts of any AI chat platform. These measures protect users and keep the environment friendly. Staff use tools and rules to monitor conversations. This helps stop harmful or inappropriate content quickly. Users can feel secure knowing there are systems to maintain a safe space.

Detecting Inappropriate Content

AI systems scan chats for harmful words and phrases. This helps catch bullying, hate speech, or adult content. The system flags messages that break the rules. Staff review flagged chats to decide if action is needed. This process keeps the platform safe for all users.
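The flag-then-review flow described above can be sketched with a simple phrase list. This is a minimal illustration, not the platform's real system: the `BLOCKLIST` contents and both function names are hypothetical, and production moderation generally relies on more than keyword matching.

```python
import re

# Hypothetical phrase list, grouped by the rule each phrase would break.
BLOCKLIST = {
    "bullying": ["loser", "nobody likes you"],
    "spam": ["free money", "click here"],
}


def flag_message(text):
    """Return the sorted rule categories a message appears to break."""
    lowered = text.lower()
    hits = set()
    for category, phrases in BLOCKLIST.items():
        for phrase in phrases:
            # Word boundaries stop "loser" from matching inside "closer".
            if re.search(r"\b" + re.escape(phrase) + r"\b", lowered):
                hits.add(category)
    return sorted(hits)


def build_review_queue(messages):
    """Keep only flagged messages, paired with their categories, for staff review."""
    queue = []
    for message in messages:
        categories = flag_message(message)
        if categories:
            queue.append((message, categories))
    return queue
```

Only messages that match a phrase reach the review queue, which mirrors the idea that staff look at flagged chats instead of reading every conversation.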

Handling Violations And Reports

When staff find rule violations, they take steps to fix them. Actions may include warnings, chat removals, or account suspensions. Users can also report bad behavior directly. Staff investigate these reports carefully. Their goal is to keep conversations respectful and safe for everyone.


User Privacy Risks

User privacy risks are a major concern when using AI chat platforms. Conversations may not be fully private. Staff or administrators might access chat data. This access can expose sensitive information without user consent.

Understanding these risks helps users protect their information. It also highlights the importance of cautious sharing during AI chats. Users should be aware that their chats might be stored or reviewed.

Potential Exposure To Employers

Platform staff are not the only people who might see a chat. If users access these platforms on a work device or network, workplace monitoring may give employers insight into that activity. An employer who sees such conversations could judge employee behavior, which may affect job security or reputation. Workplace monitoring policies often cover such digital interactions.

Risks Of Sharing Personal Information

Sharing personal details in chats increases privacy risks. Information like names, locations, and contact details can be collected. This data might be used beyond the chat service. Identity theft and scams become possible threats. Users should avoid disclosing sensitive information in AI chats.

Improving AI With User Data

Improving AI systems depends heavily on user data. Chat interactions provide valuable insights. They help AI learn language patterns and user needs. Collecting and analyzing this data allows AI to become smarter and more accurate. This process raises questions about privacy and how data is handled.

Use Of Chats For Training

Chats are essential for training AI models. They show real conversations and user behavior. Developers use this data to improve AI responses. Training helps AI understand context and slang better. Without chat data, AI learning would be limited and less effective.

Sometimes, staff review chats to identify errors or biases. This review helps fix problems and improve AI safety. The goal is to make AI more helpful and reliable for users worldwide.

Anonymization And Data Protection

User privacy is a top priority during data use. Chats are anonymized to remove personal details. This process is intended to prevent anyone from identifying the user, although details a user types into a chat can still be revealing. Data protection policies control how information is stored and accessed.

Strong security measures prevent unauthorized access to chat data. Only authorized staff can view certain conversations, usually for quality and safety checks. These practices help balance AI improvement with user privacy.
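To give a rough sense of what removing personal details can look like, the sketch below replaces email addresses and phone numbers with placeholders. The patterns and the `redact` function are hypothetical and deliberately simple, not a description of how Character AI anonymizes data.

```python
import re

# Simplified, hypothetical patterns; real anonymization pipelines are more thorough.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text
```

Pattern matching like this misses names, places, and other free-form details, which is one more reason to avoid typing sensitive information into a chat in the first place.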


Frequently Asked Questions

Is C AI Chat Monitored?

Yes, Character AI monitors chats to detect inappropriate content and enforce policies. Staff may review conversations for safety and quality.

Can C AI Staff See Deleted Chats?

Yes, Character AI staff can sometimes see deleted chats. The platform retains conversation logs for safety and improvement purposes, so deleting a message does not necessarily erase it from the servers.

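Can Employers See Your C-AI Chats?

Employers generally cannot see your C-AI chats through the platform itself. Chats on a work device or network may still be exposed through workplace monitoring, and platform staff may access chats for safety checks, so avoid sharing sensitive information.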

Do Developers Read C-AI Chats?

Developers can access C-AI chats mainly to improve the system and ensure safety. They rarely read individual conversations. Avoid sharing personal details to protect your privacy.

Conclusion

Staff at Character AI can read chats to ensure safety and enforce the platform's rules. They review messages to spot harmful or inappropriate content. Deleted chats may still be accessible for these purposes. Users should remember that conversations are not fully private.

Being careful with sensitive information is wise. Understanding this helps users use Character AI more safely. Trust and caution go hand in hand when chatting online.